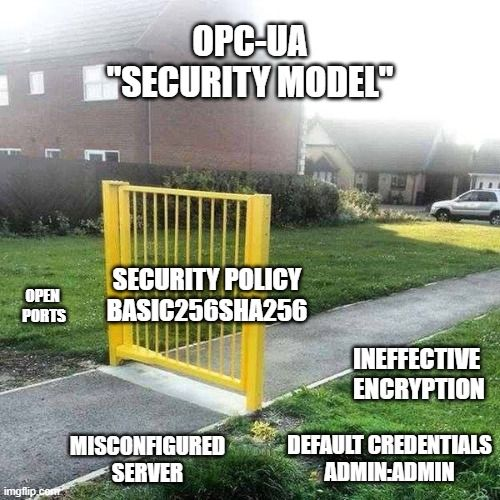

It couldn't get much worse. On one hand, manufacturers are wasting substantial amounts of time and money trying to implement OPC UA. On the other hand, OPC UA is driving innovation: its shortcomings are so comically obvious that more and more companies feel fooled by established market vendors and are beginning to seek alternative solutions. They are frustrated with the status quo and want to move into the 21st century.

But there's no point in simply venting anger. Below, in our opinion, are the top 3 reasons why OPC UA is not delivering on its promises:

Reason 1: Practical Applications of OPC UA Can Be Highly Unreliable

During our time as a System Integrator, and later as a product provider with UMH, we've encountered significant challenges with OPC UA.

Customers typically come to us with new machinery equipped with OPC UA, expecting seamless integration into their current setups due to its standardized nature. However, we've frequently observed that machine vendors and system integrators have a limited understanding of OPC UA. This often leads to implementations and configurations that cause more problems than they solve.

When security measures were in place, they were often rudimentary, relying on default username/password combinations like 'admin:admin'. Exceeding 10 to 20 data points per second would frequently cause the system to hang, requiring a complete power cycle to restart it. At times, systems became unresponsive so often that the edge gateways involved had to be power-cycled regularly.

When we discussed our experiences on LinkedIn, shared memes, and talked with (potential) customers, we noticed a divided audience:

- The Frustrated Users: This group, the majority, shared our frustrations. They supported our views and encouraged us to continue our efforts. Many from the Unified Namespace community also shared these sentiments.

- The Unaffected Ones: This group dismissed our challenges, suggesting incompetence on our part for not being able to properly utilize OPC UA. This group splits further into those who promote OPC UA without having hands-on experience and those genuinely unaffected by OPC UA’s pitfalls.

Why this disparity? It often comes down to the level of control and familiarity one has with one's systems. Those who face no issues with OPC UA typically have the leverage to impose rigorous standards on their system integrators and machine vendors, have direct access to modify PLC code, or are in a position to dictate how the OPC UA server in their machines should be configured.

But that does not describe most companies. Most companies must live with whatever the machine builder and system integrator provide. They can set some standards, but very often it is not enough to simply say, "We want all data of the machine available via OPC UA."

One could argue that this isn't directly the fault of OPC UA. In our view, however, it remains a significant concern. The developers of OPC UA were presumably aware of the market dynamics and the average skill level of the professionals likely to work with OPC UA. Despite this, they opted for a protocol of considerable complexity, leaving ample room for technical and human errors of all kinds.

This unwarranted complexity re-emerges as a central issue in both reason 2 and reason 3, further compounding the challenges faced by users.

Reason 2: It is a Security Nightmare

One of the foundational rules of cryptography is to lean on established and simple best practices and libraries instead of attempting to reinvent the wheel. OPC UA, however, appears to have missed this memo, opting to chart its own course (and again, increase complexity). The number of potential security vulnerabilities lurking in critical infrastructure due to OPC UA's unique approach is concerning.

Everyone: "Let's use SSL/TLS." OPC UA: "Let's reinvent the wheel!"

OPC UA does not use SSL/TLS. Instead, it defines its own secure channel protocol, UA Secure Conversation. (Strictly speaking, that is not entirely true: OPC UA is not a single protocol but a family of protocols, and some of its transport variants, such as HTTPS, do run over SSL/TLS. But the main one, over TCP/IP, does not, so let's focus on that. We will come back to this in reason 3.)

This decision forces every OPC UA implementation to build its security measures itself. Furthermore, OPC UA specifies not just a single method for securing a connection between two parties but several, depending on the chosen protocol (see reason 3 for details). This means that even though the protocols and security measures themselves received approval from the BSI (Germany's Federal Office for Information Security), practical implementations may still be insecure.

The problem is made worse by a notable gap in the OPC UA ecosystem: the lack of an official, lightweight, and user-friendly reference implementation. The offering by Microsoft is constrained by a dual license (GPL 2.0 and the Reciprocal Community License, RCL), which severely limits its use in commercial applications. This dual licensing also raises questions about the fairness and openness of the OPC UA framework: non-members of the OPC Foundation are subject to the restrictive GPL, while foundation members get access to the more permissive RCL.

Despite these hurdles, several unofficial open-source libraries try to fill this gap, including open62541, java-milo, python-opcua, and node-opcua. The BSI report singles out open62541 as a particularly robust and mature implementation.

Yet, all these libraries face the challenge of implementing security features from the ground up. Moreover, developing a new library is no small feat due to, as you might have guessed, the complexity of OPC UA.
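For contrast, this is roughly what "leaning on an established library" looks like for ordinary TLS. The sketch below uses Python's standard `ssl` module and shows a generic TLS client configuration, not an OPC UA transport: the default context already verifies the server certificate against the system's trusted CAs and checks the hostname, so the secure path is also the path of least resistance.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a TLS client context with secure defaults."""
    # create_default_context() loads the system's trusted CA certificates and
    # turns on certificate verification and hostname checking.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Explicitly refuse anything older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = make_client_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED)  # True: server must present a valid certificate
print(ctx.check_hostname)                    # True: certificate must match the host name
```

Nothing here requires the user to understand PKI for the common case; the library's defaults carry the security decisions.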

OPC UA is Typically Misconfigured (And It's Hard to Configure It Properly!)

The security model of OPC UA has become synonymous with complexity and misconfiguration.

The claim of "encryption" within OPC UA provides a mere illusion of security rather than the reality (see also our meme). Merely toggling OPC UA to “encrypted” status can be deceptive, offering users a false sense of security without the backing of robust security measures.

The root of the problem? The creators of the OPC UA standard. They designed a system whose complexity assumes a level of IT proficiency not commonly found in industrial environments. Proper configuration, which is needed to prevent common threats such as man-in-the-middle attacks, requires a deep understanding of public key infrastructure (PKI), expertise that goes beyond the skill set of many IT professionals and automation engineers in the manufacturing industry.
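Why "encrypted" alone proves little: encryption only protects the channel to whoever holds the other end's key. What stops a man-in-the-middle is the client refusing certificates it has not explicitly trusted. The sketch below is deliberately simplified and its function names are hypothetical; real certificate validation also checks the chain, validity period, and revocation lists. Identifying certificates by a SHA-1 thumbprint mirrors common OPC UA tooling, but treat that detail as an assumption.

```python
import hashlib


def thumbprint(cert_der: bytes) -> str:
    """SHA-1 thumbprint of a DER-encoded certificate, as upper-case hex."""
    return hashlib.sha1(cert_der).hexdigest().upper()


def accept_server_certificate(cert_der: bytes, trusted: set[str], auto_accept: bool) -> bool:
    """Decide whether a client should accept the certificate a server presents."""
    if auto_accept:
        # The "just make it work" setting: every certificate is accepted.
        # The session is still encrypted, but possibly to an attacker in the middle.
        return True
    # Only certificates an administrator explicitly placed on the trust list pass.
    return thumbprint(cert_der) in trusted


# Placeholder bytes stand in for real DER-encoded certificates.
machine_cert = b"<DER bytes of the machine's certificate>"
attacker_cert = b"<DER bytes of a self-signed attacker certificate>"
trust_list = {thumbprint(machine_cert)}

print(accept_server_certificate(attacker_cert, trust_list, auto_accept=True))   # True
print(accept_server_certificate(attacker_cert, trust_list, auto_accept=False))  # False
print(accept_server_certificate(machine_cert, trust_list, auto_accept=False))   # True
```

In both "auto-accept" and "trust list" modes the traffic is encrypted; only the second one actually knows who it is talking to.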

The BSI's analysis offers a sobering confirmation, stating,

"A secure default configuration is not a main target of the majority of products in this survey, which means that many of the security recommendations and requirements of the specification are not implemented in practice"

- German Federal Office for Information Security

A study by German security researchers reinforces this, noting that "In total, 92% of all OPC UA servers show [security] configuration deficits," and suggesting that the complexity of OPC UA's security settings is the cause.

This acknowledgement highlights a glaring issue: OPC UA's complexity often renders it insecure in practical applications.
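To make "configuration deficits" more concrete, here is a sketch of the kind of checks an audit might run against each endpoint a server exposes. The `EndpointConfig` record and the `audit` function are hypothetical (not any library's API), and the checks are our illustrative selection, not the study's methodology. Basic128Rsa15 and Basic256 are real OPC UA security policies that have since been deprecated.

```python
from dataclasses import dataclass

# Security policies deprecated by the OPC Foundation because they rely on SHA-1.
DEPRECATED_POLICIES = {"Basic128Rsa15", "Basic256"}


@dataclass
class EndpointConfig:
    """Hypothetical summary of one server endpoint's security settings."""
    url: str
    security_mode: str            # "None", "Sign" or "SignAndEncrypt"
    security_policy: str          # e.g. "None", "Basic256", "Basic256Sha256"
    user_tokens: tuple[str, ...]  # e.g. ("Anonymous", "UserName")
    auto_accept_certificates: bool


def audit(ep: EndpointConfig) -> list[str]:
    """Return human-readable findings for one endpoint (empty list = no findings)."""
    findings = []
    if ep.security_mode == "None" or ep.security_policy == "None":
        findings.append("unsecured endpoint offered")
    if ep.security_policy in DEPRECATED_POLICIES:
        findings.append(f"deprecated security policy {ep.security_policy}")
    if "Anonymous" in ep.user_tokens:
        findings.append("anonymous access allowed")
    if ep.auto_accept_certificates:
        findings.append("untrusted client certificates auto-accepted")
    return findings


# An "encrypted" endpoint that still has three findings.
example = EndpointConfig("opc.tcp://machine:4840", "SignAndEncrypt", "Basic256",
                         ("Anonymous", "UserName"), auto_accept_certificates=True)
for finding in audit(example):
    print(finding)
```

Note that the example endpoint uses SignAndEncrypt, i.e. it would show up as "encrypted", yet every other setting undermines it.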

This complexity is what brings us to our last reason.

Reason 3: OPC UA Managed to Standardize Without Actually Standardizing Anything

The inception of OPC UA was driven by a collective desire for a unified protocol across the industry—a noble endeavor indeed. However, the reality that unfolded was quite the paradox.

While everyone agreed on the need for standardization, no one seemed willing to deviate from their established practices. The standard in its current form strongly suggests that some forces actively worked against standardization, introducing complexities and edge cases that inflated the standard rather than streamlining it.

The result? A standard so broad and accommodating that it essentially allows for any possibility. The official standard itself is a testament to this.

Let’s delve into a couple of aspects of this standard to illustrate the point.

Aspect 1: Overwhelming Data Modeling Options

The first aspect to address is the overwhelming variety of data types, objects, and structures in OPC UA. The scope is so vast that delving into it for this article presents a real challenge. Here, we aim to outline our findings and provide references to underscore our point that OPC UA has standardized in a manner that paradoxically doesn't seem like standardization at all.

Initially, the concept appears straightforward: there are just 25 base data types. Arguably, many of these data types are redundant in the context of a high-level protocol like OPC UA in 2024. For instance, the distinctions between signed and unsigned types or between the various bit lengths could be streamlined. But for the sake of argument, let's accept them as they are; if this were the extent of it, the system would be reasonably manageable.

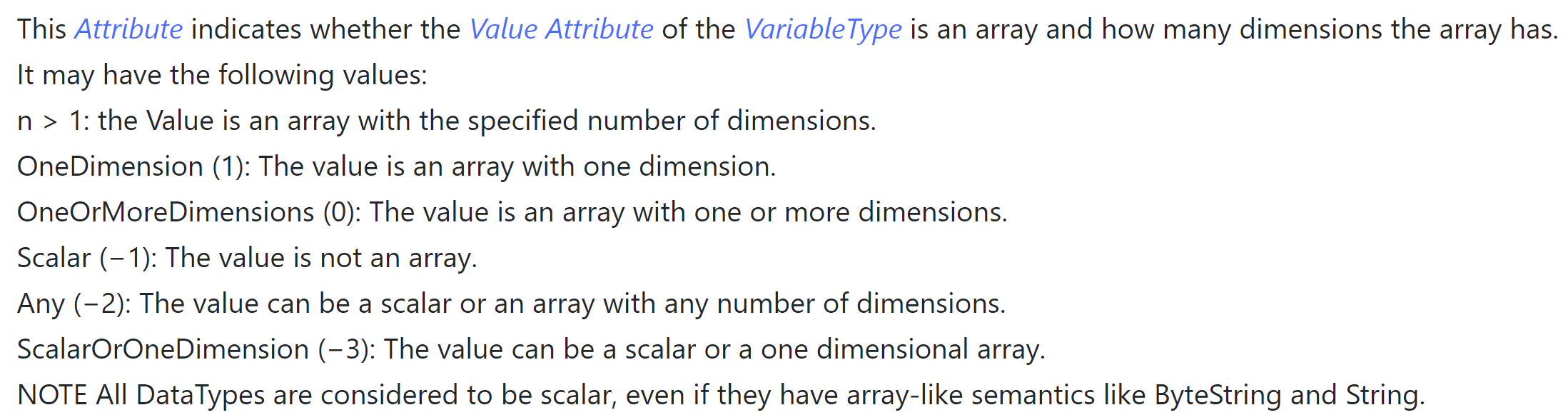
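For reference, here are the 25 built-in types with their numeric IDs, with the numeric variants singled out. Ten of the 25 are numbers that differ only in signedness, bit width, or integer versus floating point; a consumer that just wants "a number" can fold them together. The Python names `BuiltinType` and `NUMERIC` are ours, chosen for illustration.

```python
from enum import IntEnum

# The OPC UA built-in data types, in the order of their numeric IDs (1..25).
_NAMES = [
    "Boolean", "SByte", "Byte", "Int16", "UInt16", "Int32", "UInt32",
    "Int64", "UInt64", "Float", "Double", "String", "DateTime", "Guid",
    "ByteString", "XmlElement", "NodeId", "ExpandedNodeId", "StatusCode",
    "QualifiedName", "LocalizedText", "ExtensionObject", "DataValue",
    "Variant", "DiagnosticInfo",
]
# The functional Enum API numbers members from 1, matching the built-in IDs.
BuiltinType = IntEnum("BuiltinType", _NAMES)

# SByte (2) through Double (11): ten types that differ only in signedness,
# bit width, or integer vs floating point.
NUMERIC = [t for t in BuiltinType if BuiltinType.SByte <= t <= BuiltinType.Double]

print(len(BuiltinType))  # 25
print(len(NUMERIC))      # 10
```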

The complexity escalates with the introduction of arrays derived from these base types, which is an understandable feature in itself. But this is where things start to get messy. The "ValueRank" of a variable can be set to various levels, from "Scalar (-1)", meaning no array, to positive numbers specifying the array's exact number of dimensions. The standard also allows for ambiguity with settings like 0 (an array with one or more dimensions, number unspecified), -2 (a scalar or an array with any number of dimensions), or -3 (a scalar or a one-dimensional array).

Big sigh…

Now, imagine trying to parse this data automatically for use in a Historian, a Data Lake, or even a simple dashboard. You'd have to add a bunch of logic on top of existing libraries just to make sense of and use the data.
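As an illustration of that extra logic, here is a sketch of a check a consumer might run before storing a value: does the shape of what arrived match the variable's declared ValueRank? It assumes the client library hands over arrays as nested Python lists and that multi-dimensional arrays are rectangular; both are assumptions, and real libraries may represent arrays differently. The ValueRank constants follow the values described above; the function names are hypothetical.

```python
SCALAR_OR_ONE_DIMENSION = -3
ANY = -2
SCALAR = -1
ONE_OR_MORE_DIMENSIONS = 0


def ndim(value) -> int:
    """Number of array dimensions of a nested-list value (0 for a scalar)."""
    depth = 0
    while isinstance(value, list):
        depth += 1
        if not value:          # an empty array still counts as one dimension
            break
        value = value[0]       # assumes rectangular arrays: inspect the first element
    return depth


def matches_value_rank(value, value_rank: int) -> bool:
    """Check whether a decoded value is allowed by the declared ValueRank."""
    n = ndim(value)
    if value_rank == SCALAR_OR_ONE_DIMENSION:
        return n in (0, 1)
    if value_rank == ANY:
        return True
    if value_rank == SCALAR:
        return n == 0
    if value_rank == ONE_OR_MORE_DIMENSIONS:
        return n >= 1
    if value_rank > 0:
        return n == value_rank   # exactly this many dimensions
    raise ValueError(f"invalid ValueRank: {value_rank}")


print(matches_value_rank(21.5, SCALAR))                                # True
print(matches_value_rank([21.5, 22.0], SCALAR))                        # False
print(matches_value_rank([[1, 2], [3, 4]], 2))                         # True
print(matches_value_rank([[1, 2], [3, 4]], SCALAR_OR_ONE_DIMENSION))  # False
```

And this only validates the shape; deciding how to store a value whose rank is declared as -2 is a separate problem.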

The complexity doesn't stop there. Take the "Variant" data type, which can be anything. You can make "Variant Arrays" where the first item is an XML file, the next is a number, then a string. With "ValueRank," you can even make this array change shape, sometimes being a single item, other times a 34-dimensional array of XML files, DateTimes, and numbers.

In OPC UA, it is possible to have a variable (a "tag") that regularly changes its shape between a scalar, a normal array, and a 34-dimensional array with varying data types of XML files, DateTimes, and numbers.
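A historian or data lake usually wants one stable column type per tag. Here is a sketch of the drift check it ends up needing for Variant-typed tags, assuming decoded values arrive as Python scalars and nested lists (an XmlElement is shown as a plain string). The function names and readings are hypothetical.

```python
from datetime import datetime


def signature(value) -> tuple[int, frozenset[str]]:
    """(array depth, set of element type names) of a decoded value."""
    types: set[str] = set()

    def walk(v, depth: int) -> int:
        if isinstance(v, list):
            # Depth of the deepest element; an empty list is still one level deep.
            return max((walk(item, depth + 1) for item in v), default=depth + 1)
        types.add(type(v).__name__)
        return depth

    dims = walk(value, 0)
    return dims, frozenset(types)


def drifting_samples(samples: list) -> list[int]:
    """Indices of samples whose shape or element types differ from the first."""
    if not samples:
        return []
    baseline = signature(samples[0])
    return [i for i, s in enumerate(samples) if signature(s) != baseline]


# Three successive readings of the same hypothetical tag.
readings = [
    21.5,
    [21.5, 22.0],
    [["<xml/>", datetime(2024, 1, 1), 3]],
]
print(drifting_samples(readings))  # [1, 2]
```

Detecting the drift is the easy part; the consumer still has to decide whether to reject, split, or serialize such values.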

"But UMH, those are just rare cases, no one actually uses them." Really? We've seen some strange data models even in simple setups. And if you buy 500 machines from different makers, each programmed by someone else aiming to be "OPC UA compliant," you're bound to encounter all sorts of oddities.

We wanted to talk about Objects and Structures in OPC UA, starting with variables and arrays. But the complexity and sheer absurdity we’ve run into just at this stage clearly show the problem: OPC UA gives you too many ways to model data, making what should be a straightforward process anything but.

Why not keep it simple? Use basic types like strings, numbers, and bytes. Let people make one or multi-dimensional arrays from these. Organize them with folders. That’s all you need for most things.
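A sketch of how small that model can be. The `Folder` class and its methods are hypothetical, written only to illustrate the idea: tags hold strings, numbers, bytes, or (nested) lists of them, folders organize them, and flattening yields slash-separated paths much like MQTT topics.

```python
from dataclasses import dataclass, field
from typing import Union

# Basic types only: strings, numbers, and bytes.
Scalar = Union[str, int, float, bytes]
# A value is a scalar or a (possibly nested) list of scalars.
Value = Union[Scalar, list]


@dataclass
class Folder:
    """A named folder holding tags and subfolders."""
    name: str
    tags: dict[str, Value] = field(default_factory=dict)
    folders: dict[str, "Folder"] = field(default_factory=dict)

    def folder(self, name: str) -> "Folder":
        """Return the subfolder with this name, creating it if needed."""
        return self.folders.setdefault(name, Folder(name))

    def flatten(self, prefix: str = "") -> dict[str, Value]:
        """Map slash-separated paths to values."""
        base = f"{prefix}{self.name}/"
        out = {base + key: value for key, value in self.tags.items()}
        for sub in self.folders.values():
            out.update(sub.flatten(base))
        return out


plant = Folder("plant")
press = plant.folder("line1").folder("press")
press.tags["temperature"] = 71.3
press.tags["serial_number"] = "P-0042"
press.tags["pressure_curve"] = [1.0, 1.2, 1.5]
for path, value in plant.flatten().items():
    print(path, value)
```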

Aspect 2: It's Not One Protocol, It's Actually Over 27 Different Protocols

When it comes to standardized device connectivity, OPC UA's approach seems fragmented. There is the Client-Server model over TCP/IP, HTTPS, and WebSockets, and the Pub-Sub model over UDP multicast, broadcast, and unicast, raw Ethernet (which skips the usual IP and UDP headers), AMQP, and MQTT.

Each of these transports can in turn be combined with different encodings (OPC UA Binary, OPC UA XML, and OPC UA JSON), adding layer upon layer of options. This alone already amounts to 27 different combinations within the same 'standardized' protocol, and that's before we even touch on security, session handling, or data modeling.
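The arithmetic behind that number, as a plain cross product of the transports and encodings listed above. This simply reproduces our count: it treats every pairing as a separate variant and does not yet include security policies, session handling, or data modeling.

```python
from itertools import product

# Transports named above, grouped by communication model.
CLIENT_SERVER = ["TCP/IP", "HTTPS", "WebSockets"]
PUB_SUB = ["UDP multicast", "UDP broadcast", "UDP unicast", "Ethernet", "AMQP", "MQTT"]
ENCODINGS = ["OPC UA Binary", "OPC UA XML", "OPC UA JSON"]

# Every (transport, encoding) pairing counted as a distinct variant.
variants = list(product(CLIENT_SERVER + PUB_SUB, ENCODINGS))
print(len(variants))  # 9 transports x 3 encodings = 27
```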

This leads to a conundrum where two devices, both speaking OPC UA, may find themselves incapable of communication because they're essentially using different languages.

The standard's complexity does not foster interoperability but hinders it, leading to situations where 'standardized' is anything but.
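A toy illustration of how that happens: if each device is described by the (transport, encoding) pairs it supports, two devices can only talk when those sets overlap. The devices and the `negotiate` helper are hypothetical, and real OPC UA endpoint discovery involves more than this.

```python
from typing import Optional

Profile = tuple[str, str]  # (transport, encoding)


def negotiate(client_prefs: list[Profile], server_supports: set[Profile]) -> Optional[Profile]:
    """Return the client's most preferred profile that the server also supports, if any."""
    for profile in client_prefs:
        if profile in server_supports:
            return profile
    return None


# Two hypothetical devices, both advertised as "OPC UA compliant".
scada_client = [("TCP/IP", "OPC UA Binary")]
machine = {("MQTT", "OPC UA JSON"), ("UDP multicast", "OPC UA Binary")}

print(negotiate(scada_client, machine))  # None: no common variant, no communication
```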

In summary, OPC UA's approach to standardization has produced a convoluted ecosystem in which the promise of interoperability is undermined by an overabundance of configurations and options. The quest for a universal protocol has, ironically, given rise to a new Tower of Babel in industrial communications.

What now?

OPC UA has become emblematic of the challenges within manufacturing, yet this also opens a door for opportunity. The shortcomings of established vendors, who appear trapped in methodologies of the 90s, have inadvertently paved the path for innovation. Among the frustrated users of OPC UA, movements like the Unified Namespace community are gaining momentum and advocating for change.

If any member of the OPC Foundation reads this, please consider the following proposal: simplify your standard by removing 95% of its complexity while preserving its essential functionality. Strive for simplicity and user-friendliness: make it so straightforward that it requires little to no configuration and is secure by default. Keep in mind that most of the industry is still struggling with basic tasks like extracting crucial data from production machinery. Let's focus on empowering these users first before tackling more advanced challenges.

In the face of such frustration, what should one do?

If OPC UA is your only option, then resilience is required to navigate this complex landscape (or a lot of alcohol to drown one's frustration). However, if you have a choice, it might be prudent to consider alternative protocols. Options that are straightforward, secure, and time-tested exist and can often meet the majority of operational needs:

- HTTP / HTTPS for client-server communication offers simplicity and ubiquity.

- MQTT for pub-sub systems provides lightweight and efficient messaging.

- Traditional protocols like Siemens S7 (s7comm), Modbus, and others, while not the newest, offer reliability and simplicity. There is no need to put an additional OPC UA protocol converter on top of them. When you're tasked with connecting over a thousand PLCs globally, the priority often shifts to dependable data extraction over cutting-edge features.
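To make "straightforward" concrete, here are sketches of two complete messages built by hand with nothing but Python's `struct` module: a Modbus TCP request to read holding registers (function code 0x03) and an MQTT PUBLISH packet at QoS 0. They illustrate the wire formats only: no sockets, no MQTT CONNECT handshake (which a real client sends first), and minimal error handling. The function names are ours; in production you would use an established client library.

```python
import struct


def modbus_read_holding_registers(transaction_id: int, unit_id: int, start: int, count: int) -> bytes:
    """Build a Modbus TCP 'Read Holding Registers' (0x03) request frame."""
    pdu = struct.pack(">BHH", 0x03, start, count)  # function code, start address, quantity
    # MBAP header: transaction id, protocol id (always 0),
    # length of the remaining bytes (unit id + PDU), unit id.
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


def parse_holding_registers(frame: bytes) -> list[int]:
    """Extract register values from a Modbus TCP response to function 0x03."""
    function_code, byte_count = frame[7], frame[8]  # right after the 7-byte MBAP header
    if function_code != 0x03:
        raise ValueError(f"unexpected function code {function_code:#04x}")
    data = frame[9:9 + byte_count]
    return list(struct.unpack(f">{byte_count // 2}H", data))  # 16-bit big-endian registers


def mqtt_remaining_length(n: int) -> bytes:
    """Encode MQTT's variable-length 'remaining length' field (7 bits per byte)."""
    out = bytearray()
    while True:
        byte, n = n % 128, n // 128
        out.append(byte | 0x80 if n else byte)  # high bit set: more bytes follow
        if not n:
            return bytes(out)


def mqtt_publish_qos0(topic: str, payload: bytes) -> bytes:
    """Build an MQTT PUBLISH packet with QoS 0 (so no packet identifier)."""
    topic_bytes = topic.encode("utf-8")
    body = struct.pack(">H", len(topic_bytes)) + topic_bytes + payload
    return bytes([0x30]) + mqtt_remaining_length(len(body)) + body


print(modbus_read_holding_registers(1, 1, 0, 10).hex())  # 00010000000601030000000a
response = bytes.fromhex("000100000007010304002a0100")
print(parse_holding_registers(response))                 # [42, 256]
print(mqtt_publish_qos0("a/b", b"1").hex())              # 30060003612f6231
```

A complete Modbus read request is 12 bytes; the MQTT packet above is 8. Both formats fit on an index card.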

While these alternatives may not cater to every niche requirement, it's worth considering whether such edge cases represent true needs or merely theoretical exercises. After all, if a scenario hasn't arisen in decades of industry practice, perhaps it's not as critical as once thought.

We invite the community to engage in this discussion. Share your thoughts on LinkedIn, debate with peers, and spread awareness about the realities of industrial protocol integration.

Disclaimer: As a company providing IIoT solutions, we recognize the entrenched status of OPC UA in the market. Avoiding it entirely may not be feasible, but that doesn't mean we have to accept the status quo passively. We stand with our community and enterprise customers, committed to easing their burdens while still holding onto a vision of a future where OPC UA's complexities are a thing of the past.