Are you looking to connect your Node-RED instance to SAP SQL Anywhere using an ODBC driver? This guide provides step-by-step instructions for customizing a Node-RED Docker image so that it can connect to SAP SQL Anywhere. By following these instructions, you can build the container, push it to your repository, and add it as a custom microservice in the UMH Helm chart. Let's get started!

Instructions

- Clone the Node-RED Docker repository from GitHub using the following command:

      git clone https://github.com/node-red/node-red-docker.git

- Change the directory to docker-custom using the command:

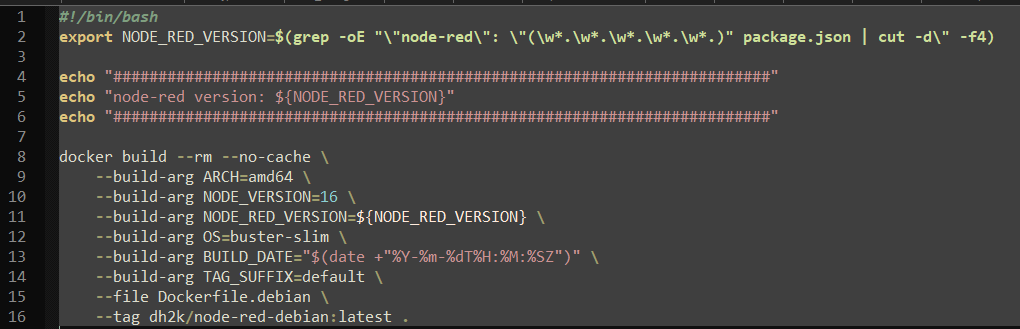

      cd node-red-docker/docker-custom

- Open the docker-debian.sh file and update the Node.js version and the default tag to suit your setup. In this example, the tag points to a custom repository and the Node.js version is set to 16. As the base image, you can also use `bullseye-slim` instead of `buster-slim`.

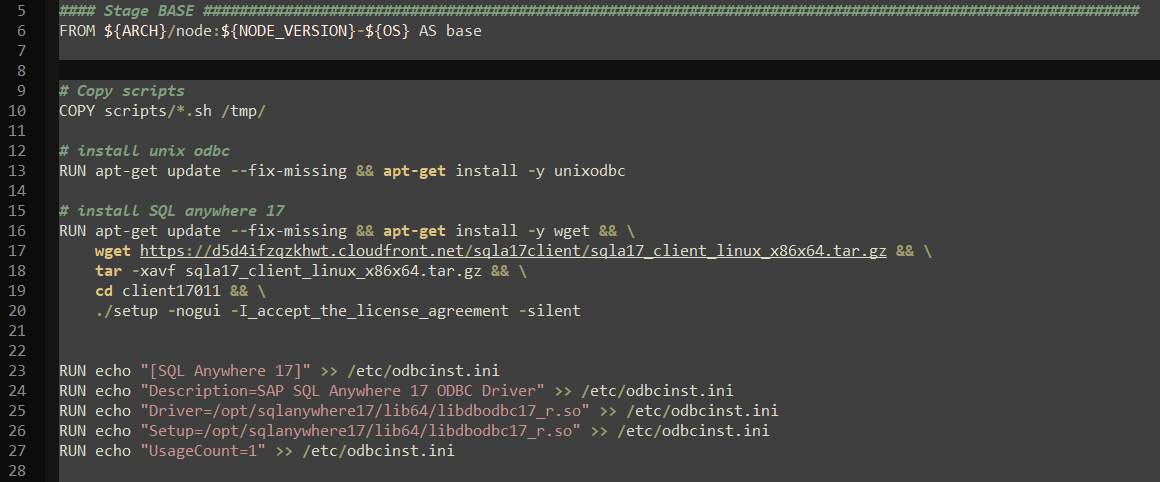
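For orientation, the settings this step touches can be sketched as a small shell function. This is only an illustration: the names `BASE_OS`, `IMAGE_TAG`, and `print_build_command` are hypothetical, the `--build-arg` names are assumptions that may not match your copy of `docker-debian.sh`, and `myrepo/node-red-sqlanywhere:latest` stands in for your own repository. The function only prints a command so you can compare it with the script.

```shell
#!/bin/sh
# Hypothetical values; adjust them to match your own setup.
NODE_VERSION="${NODE_VERSION:-16}"     # Node.js major version
BASE_OS="${BASE_OS:-bullseye-slim}"    # or buster-slim
IMAGE_TAG="${IMAGE_TAG:-myrepo/node-red-sqlanywhere:latest}"  # placeholder tag

# Print (do not run) a docker build command using the values above.
# The --build-arg names are assumptions; check them against Dockerfile.debian.
print_build_command() {
    printf 'docker build --build-arg NODE_VERSION=%s --build-arg OS=%s --file Dockerfile.debian --tag %s .\n' \
        "$NODE_VERSION" "$BASE_OS" "$IMAGE_TAG"
}

print_build_command
```

Keeping these values in variables makes it easier to see at a glance which Node.js version, base image, and tag a given build used.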

- Next, modify the Dockerfile.debian file to install the unixODBC driver manager and the SQL Anywhere version of your choice. In this example, we install version 17. Update the driver template settings to match your driver.

# install unix odbc RUN apt-get update --fix-missing && apt-get install -y unixodbc # install SQL anywhere 17 RUN apt-get update --fix-missing && apt-get install -y wget && \ wget https://d5d4ifzqzkhwt.cloudfront.net/sqla17client/sqla17_client_linux_x86x64.tar.gz && \ tar -xavf sqla17_client_linux_x86x64.tar.gz && \ cd client17011 && \ ./setup -nogui -I_accept_the_license_agreement -silent # create a driver template RUN echo "[SQL Anywhere 17]" >> /etc/odbcinst.ini RUN echo "Description=SAP SQL Anywhere 17 ODBC Driver" >> /etc/odbcinst.ini RUN echo "Driver=/opt/sqlanywhere17/lib64/libdbodbc17_r.so" >> /etc/odbcinst.ini RUN echo "Setup=/opt/sqlanywhere17/lib64/libdbodbc17_r.so" >> /etc/odbcinst.ini RUN echo "UsageCount=1" >> /etc/odbcinst.ini - Export the environment variables to allow Node-Red to use the shared system files. These variables can be found in the installation folder for SQL Anywhere, usually

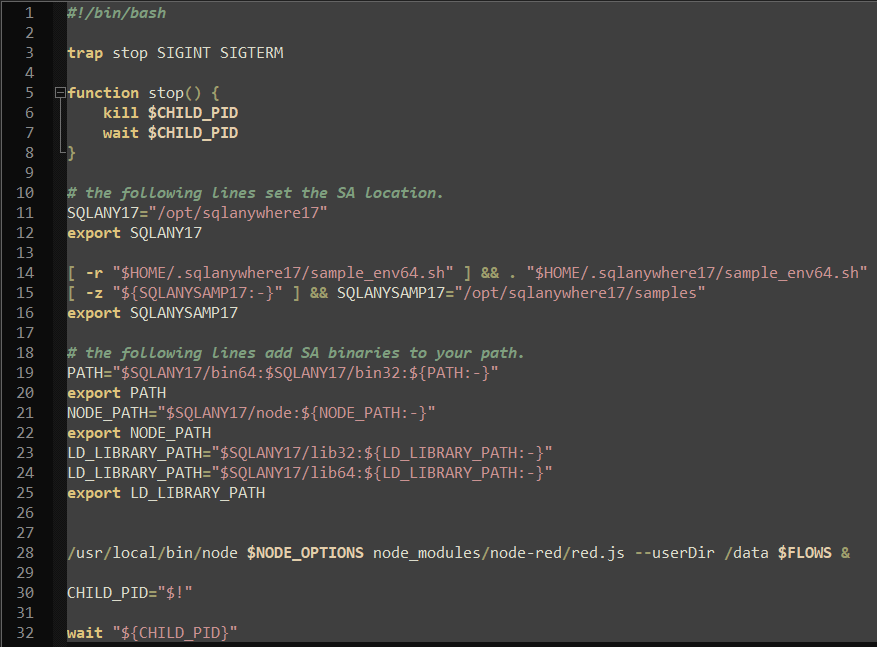

/opt/sqlanywhere17/bin64/and thesa_config.shscript. Copy the values and insert them in the/scripts/entrypoint.shfile in the docker-custom folder.

      # the following lines set the SA location.
      SQLANY17="/opt/sqlanywhere17"
      export SQLANY17

      [ -r "$HOME/.sqlanywhere17/sample_env64.sh" ] && . "$HOME/.sqlanywhere17/sample_env64.sh"
      [ -z "${SQLANYSAMP17:-}" ] && SQLANYSAMP17="/opt/sqlanywhere17/samples"
      export SQLANYSAMP17

      # the following lines add SA binaries to your path.
      PATH="$SQLANY17/bin64:$SQLANY17/bin32:${PATH:-}"
      export PATH
      NODE_PATH="$SQLANY17/node:${NODE_PATH:-}"
      export NODE_PATH
      LD_LIBRARY_PATH="$SQLANY17/lib32:${LD_LIBRARY_PATH:-}"
      LD_LIBRARY_PATH="$SQLANY17/lib64:${LD_LIBRARY_PATH:-}"
      export LD_LIBRARY_PATH

- Build the Docker container using the command:

      ./docker-debian.sh

- Push the newly built Docker image to your repository.

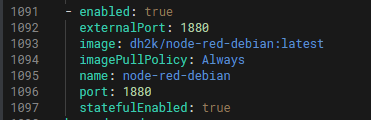
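Tagging and pushing can be sketched as follows. `docker tag` and `docker push` are standard Docker CLI commands, while the local image name `testing:node-red-build`, the target `myrepo/node-red-sqlanywhere:latest`, and the helper `push_image` are placeholders assumed for illustration. With `DRY_RUN=1` the commands are printed instead of executed.

```shell
#!/bin/sh
# push_image LOCAL_IMAGE REMOTE_IMAGE
# Tags a locally built image for a registry and pushes it.
# With DRY_RUN=1 the commands are only printed.
push_image() {
    local_image="$1"
    remote_image="$2"
    if [ -z "$local_image" ] || [ -z "$remote_image" ]; then
        echo "usage: push_image LOCAL_IMAGE REMOTE_IMAGE" >&2
        return 2
    fi
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "docker tag $local_image $remote_image"
        echo "docker push $remote_image"
        return 0
    fi
    docker tag "$local_image" "$remote_image" && docker push "$remote_image"
}

# Example with placeholder names; only prints the commands.
DRY_RUN=1
push_image testing:node-red-build myrepo/node-red-sqlanywhere:latest
```

To perform the real push, set `DRY_RUN=0` and log in to your registry with `docker login` beforehand.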

- Add the Docker container as a custom microservice in the UMH Helm chart.
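Before wiring the container into the chart, it can help to confirm that the driver template and the library path from the earlier steps are in place. The following is a minimal sketch, assuming the paths used in this guide; the helper names `has_driver_section` and `in_library_path` are hypothetical. Run it in a shell where the exports have been applied (for example from within the entrypoint script), because a separate `docker exec` shell does not inherit variables exported by the entrypoint.

```shell
#!/bin/sh
# has_driver_section INI_FILE DRIVER_NAME
# Succeeds if INI_FILE contains a line that is exactly [DRIVER_NAME].
has_driver_section() {
    grep -Fqx "[$2]" "$1" 2>/dev/null
}

# in_library_path DIR
# Succeeds if DIR is one of the entries in LD_LIBRARY_PATH.
in_library_path() {
    case ":${LD_LIBRARY_PATH:-}:" in
        *":$1:"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Paths below are taken from this guide; adjust them for your version.
if has_driver_section /etc/odbcinst.ini "SQL Anywhere 17"; then
    echo "driver template found"
else
    echo "driver template missing"
fi

if in_library_path /opt/sqlanywhere17/lib64; then
    echo "lib64 is on LD_LIBRARY_PATH"
else
    echo "lib64 is not on LD_LIBRARY_PATH"
fi
```

Inside the container, unixODBC's `odbcinst -q -d` also lists the registered drivers, which gives a second way to check the template.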