We have transitioned to new Benthos-based bridges and introduced an automatic Helm upgrade feature. This article explains these changes, the reasons behind them, the actions required—including steps for maintaining backward compatibility—and the benefits they offer.

Chapter 1: Transition to Benthos-Based Bridges

Background

Our previous data bridges were custom-written in Go. While they fulfilled their purpose, they had several limitations:

- Architecture Limitations: Dependencies used did not support ARM architectures, restricting deployment options on devices like Raspberry Pi.

- Maintenance Challenges: Custom code required significant effort to maintain and update.

- Limited Monitoring Tools: Users lacked effective tools to monitor and troubleshoot data flows.

Introducing Benthos and Data Flow Components (DFCs)

To address these challenges, we have transitioned to using Benthos, an open-source data streaming processor, as the foundation for our data bridges.

Benthos is a high-performance data stream processor that allows for flexible data input, processing, and output configurations through a single YAML file. It offers:

- Cross-Platform Compatibility: Supports ARM architectures, enabling deployment on a wider range of devices.

- Simplified Configuration: Reduces the need for custom code by leveraging configuration files.

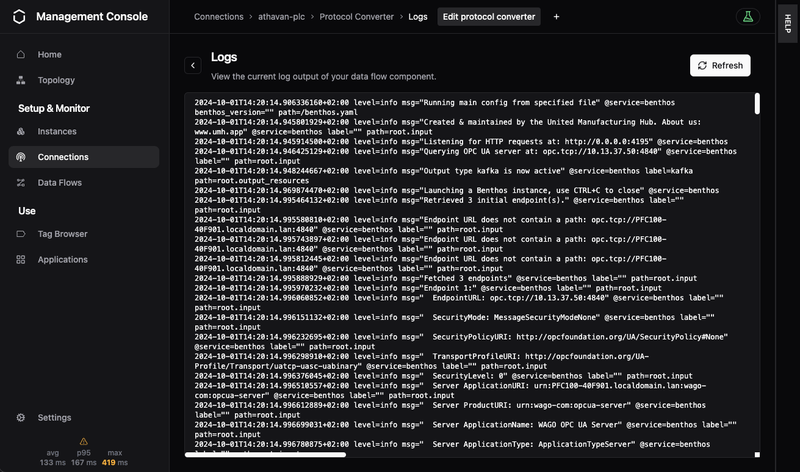

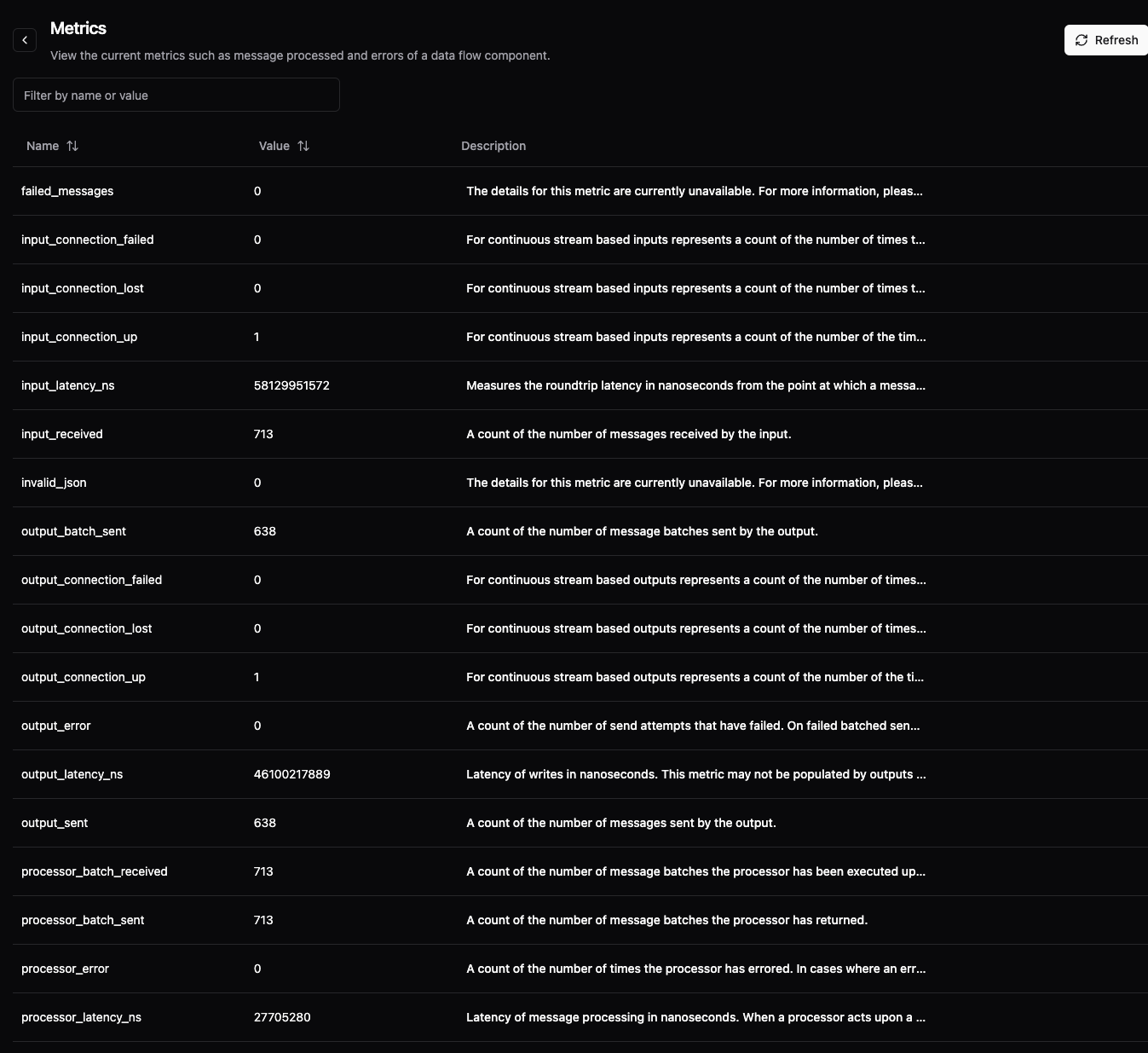

- Enhanced Visibility: Provides metrics and logging capabilities for better monitoring and troubleshooting.

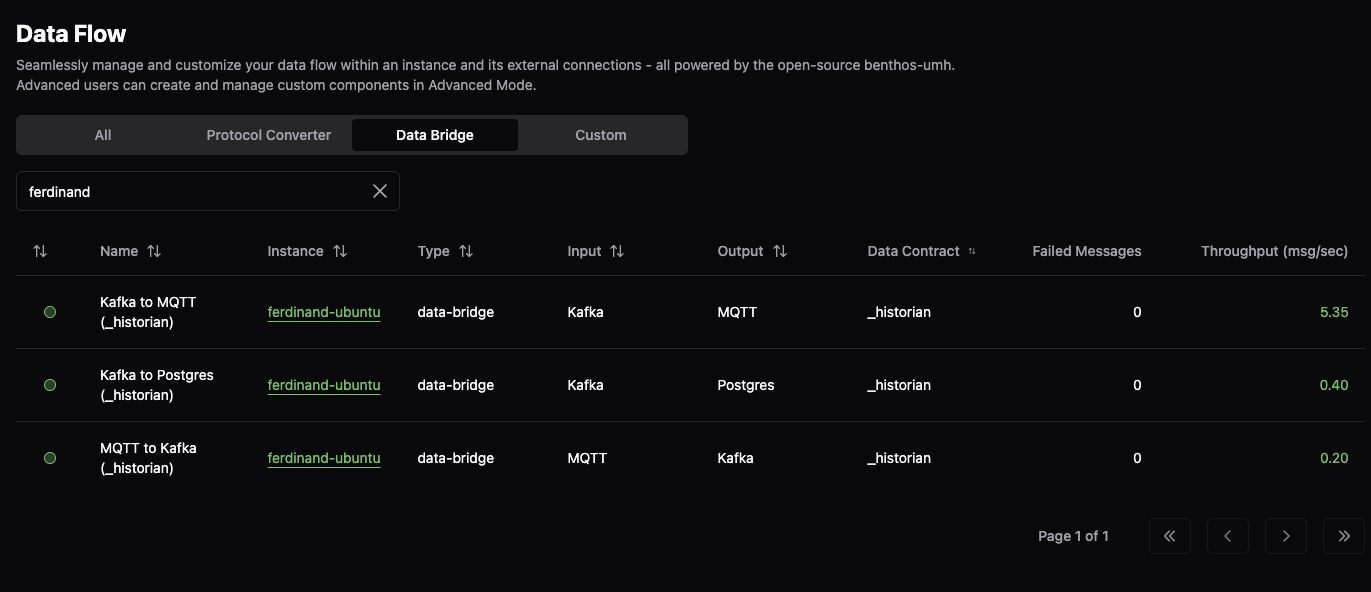
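To make the single-file layout concrete, here is a minimal sketch of what a Benthos configuration can look like. It assumes the component names from upstream Benthos (mqtt, mapping, stdout, prometheus) and JSON object payloads. The broker address, topic filter, and client ID are placeholders, not UMH defaults.

```yaml
# Minimal Benthos configuration sketch: input, processing, and output in one file.
# All addresses and names below are placeholders chosen for illustration.
input:
  mqtt:
    urls:
      - tcp://localhost:1883      # placeholder broker address
    topics:
      - example/#                 # placeholder topic filter
    client_id: example-bridge     # hypothetical client ID

pipeline:
  processors:
    # Bloblang mapping: keep the JSON payload and add the time it was received.
    - mapping: |
        root = this
        root.received_at = now()

output:
  stdout: {}                      # print each message, handy while testing

# Visibility: log level and a Prometheus metrics endpoint.
logger:
  level: INFO
metrics:
  prometheus: {}
```

Swapping the input or output section for another component changes what the bridge connects to, without touching any custom code.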

Data Flow Components (DFCs) are integral to data movement within UMH and are based on Benthos-UMH, our fork of Benthos. Types of DFCs include:

- Protocol Converters: Convert data between different protocols and the Unified Namespace (UNS).

- Data Bridges: Connect UNS core modules, such as bridging between MQTT and Kafka.

- Stream Processors: Process data within the UNS for tasks like tag renaming or data enrichment.

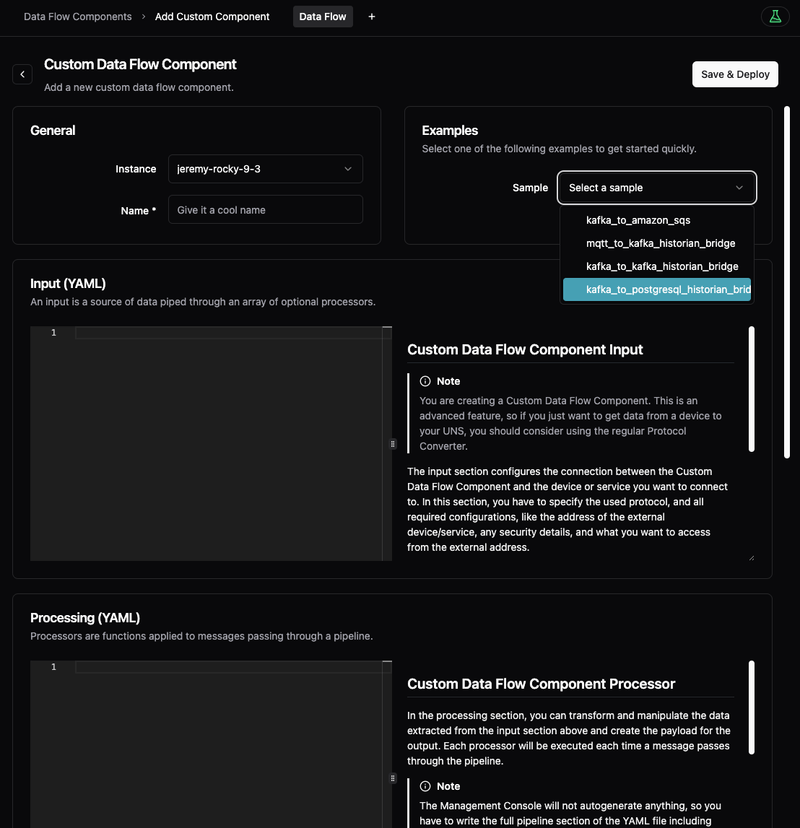

- Custom Components: Allow for flexible specification of inputs, processing logic, and outputs.

Reasons for the Change

We made this transition to Benthos-based bridges for several key reasons:

- Improved Compatibility: By removing dependencies that did not support ARM architectures, we eliminated limitations that previously restricted deployment on ARM devices.

- Enhanced Maintainability: Transitioning to Benthos reduces the amount of custom code, making the system easier to maintain and update.

- User-Friendly Configuration: The use of YAML configuration files makes customization more accessible, allowing non-developers to understand, view, and duplicate bridges.

- Better Monitoring and Troubleshooting: Users can view logs, metrics, and throughput of bridges directly in the management console, improving the ability to monitor and troubleshoot data flows.

- Unified Infrastructure: By standardizing on Benthos, we streamline management and monitoring, consolidating tools and reducing complexity within UMH.

Chapter 2: Actions Required for Users

For Users of the _historian Schema

No Action Required: The transition is seamless. Your data flows will continue to work without any changes.

For Users with Custom Redpanda Configurations

This update resets any topic-specific configurations. There is no automated step to restore them, so you need to reapply them manually after the update.

For Users of the _analytics Schema

Deploy Custom Data Flow Components: You need to deploy custom DFCs to bridge _analytics data from MQTT to Kafka and from Kafka back to MQTT.

How to Proceed:

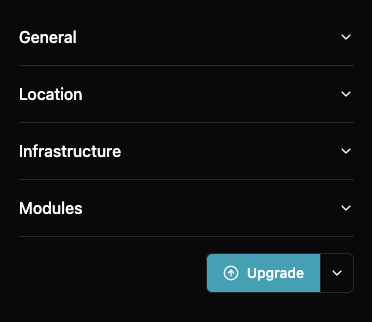

1. Access the Management Console: Navigate to the Custom Data Flow Components section.

2. Create a new DFC for MQTT to Kafka: Use the mqtt_to_kafka_historian sample as a starting point and adjust it for the _analytics schema.

3. Create a new DFC for Kafka to MQTT: Do the same with the kafka_to_mqtt_historian sample for the opposite direction.

The existing kafka-to-postgresql-v2 service is kept during the upgrade so that _analytics data continues to be saved from Kafka to PostgreSQL. Its ability to subscribe to _historian data has been deactivated so that it does not conflict with the new bridges.
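As a rough illustration of step 2, the sketch below shows how an MQTT-to-Kafka DFC for _analytics might be shaped using upstream Benthos components. It is not a copy of the mqtt_to_kafka_historian sample. The broker hostnames, the umh/v1/# topic filter, the client ID, and the one-to-one topic name conversion are all assumptions made for illustration, so take the real values from the sample in the management console.

```yaml
# Sketch of an MQTT-to-Kafka bridge restricted to the _analytics schema.
# Hostnames, topic filter, and client ID are assumptions, not UMH defaults.
input:
  mqtt:
    urls:
      - tcp://mqtt-broker:1883           # placeholder broker address
    topics:
      - umh/v1/#                         # assumed topic filter
    client_id: analytics-mqtt-to-kafka   # hypothetical client ID

pipeline:
  processors:
    # Drop every message whose MQTT topic is not part of the _analytics schema.
    - mapping: |
        root = if !meta("mqtt_topic").or("").contains("/_analytics/") { deleted() }

output:
  kafka:
    addresses:
      - kafka-broker:9092                # placeholder broker address
    # Simplification: derive the Kafka topic by replacing "/" with ".".
    topic: '${! meta("mqtt_topic").replace_all("/", ".") }'
    client_id: analytics-mqtt-to-kafka
```

The Kafka-to-MQTT direction mirrors this with a kafka input and an mqtt output. A two-way bridge also needs a way to avoid echoing its own messages back, which this sketch does not cover.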

For Users of the Old ia/ Topic Structure

- Enable Legacy Data Model via Helm: Adjust the Helm chart settings to enable the legacy data model by setting _003_legacyConfig.enableLegacyDataModel to true:

sudo helm --kubeconfig /etc/rancher/k3s/k3s.yaml upgrade -n united-manufacturing-hub --reuse-values --set _003_legacyConfig.enableLegacyDataModel=true united-manufacturing-hub united-manufacturing-hub/united-manufacturing-hub

- Deploy Custom Data Flow Components: You need to deploy custom DFCs to bridge ia/# data from MQTT to Kafka and from Kafka back to MQTT. See “For Users of the _analytics Schema” for more information.

- There were no changes to factoryinsight.
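If you prefer to keep the legacy setting in a values file rather than on the command line, the --set flag corresponds to the following nested YAML. This is a sketch of the equivalent fragment; the file name legacy-values.yaml is hypothetical.

```yaml
# legacy-values.yaml (hypothetical file name)
# Equivalent to: --set _003_legacyConfig.enableLegacyDataModel=true
_003_legacyConfig:
  enableLegacyDataModel: true
```

Such a file can be passed to helm upgrade with the -f flag in place of the --set argument.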

Chapter 3: Emphasizing Data Contracts and De-emphasizing the _analytics Schema

Understanding Data Contracts

Data Contracts are formal agreements specifying how data is handled, processed, and stored. They define the structure, format, semantics, quality, and usage terms of the data exchanged between providers and consumers—similar to APIs for data sharing.

Default UMH Data Contracts

- _historian: Used for historical data storage, this contract specifies how time-series data is sent to and stored in the database.

- _analytics: Previously half-implemented, it is now disabled by default for new users to simplify the system.

Reasons for Moving Away from _analytics

We are de-emphasizing the _analytics schema to align with our product vision of providing well-functioning and thoroughly tested features. By focusing on robust schemas like _historian, we reduce complexity and improve system reliability. Existing users who rely on _analytics can still activate it manually, but new users will start with a cleaner setup emphasizing stability and performance.

Custom Data Contracts

We encourage users to define their own data contracts to meet specific needs, in line with our commitment to supporting power users while maintaining a robust default setup. With the new Benthos-based bridges and Data Flow Components, you can easily duplicate existing bridges and reuse configurations we’ve developed, tailoring them to your requirements.

Example: Creating a Custom _ERP Data Contract

Suppose you have data coming in via a custom data contract called _ERP, and you want to store it in TimescaleDB:

- Create Custom Data Bridges:

  - Bridge MQTT to Kafka: Deploy a DFC to bridge _ERP data from MQTT to Kafka.

  - Bridge Kafka to PostgreSQL: Duplicate the existing kafka-to-postgresql bridge used for _historian and adjust it for the _ERP schema.

  - Optional - Bridge Kafka back to MQTT: If required, deploy a DFC to bridge data from Kafka back to MQTT for the _ERP schema.

- Define the Schema: Specify how the data should be stored, including table creation and migrations, using Benthos configurations similar to our default setups.

- Deploy and Monitor: Use the management console to deploy the bridges and monitor their performance, leveraging the same tools and configurations provided for default bridges.

This approach allows you to leverage the robustness of our existing solutions while customizing them to fit your specific data handling needs, without starting from scratch.
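For the Kafka-to-PostgreSQL step, a bridge could look roughly like the sketch below. It assumes that the sql_insert output from upstream Benthos is available in your build, which you should verify first. The Kafka topic, consumer group, table name, columns, and credentials are all hypothetical, and the actual _historian bridge you duplicate may be structured differently.

```yaml
# Sketch of a Kafka-to-TimescaleDB bridge for a custom _ERP contract.
# Topic, consumer group, table, columns, and DSN are hypothetical placeholders.
input:
  kafka:
    addresses:
      - kafka-broker:9092                # placeholder broker address
    topics:
      - umh.v1.example._ERP              # hypothetical Kafka topic
    consumer_group: erp-to-postgres      # hypothetical consumer group

output:
  sql_insert:
    driver: postgres
    dsn: postgres://user:password@timescaledb:5432/umh?sslmode=disable
    table: erp_messages
    columns: [ received_at, kafka_key, payload ]
    # One value per column: receive time, Kafka key, raw message body as text.
    args_mapping: |
      root = [ now(), meta("kafka_key").or(""), content().string() ]
    # Runs once on first connection; creates the table if it does not exist yet.
    init_statement: |
      CREATE TABLE IF NOT EXISTS erp_messages (
        received_at TIMESTAMPTZ NOT NULL,
        kafka_key   TEXT,
        payload     TEXT
      );
```

Storing the raw payload as text keeps the sketch schema-agnostic; a real _ERP contract would more likely map individual payload fields to typed columns.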

Chapter 4: Automatic Helm Upgrade and Streamlined Helm Chart

Automatic Helm Upgrade

We have introduced an automatic Helm upgrade feature to simplify the update process. When you update the instance in the management console, the system will automatically perform the Helm upgrade.

Reasons for the Change

- Simplified Upgrade Process: Reduces manual steps, making it easier to keep your UMH instance up to date.

- Reduced Potential for Errors: Minimizes the risk of user errors during upgrades.

- Improved System Reliability: Ensures all users have the latest compatible versions of core components.

New Feature Highlights

- One-Click Upgrade: Upgrade UMH with a single click in the management console, simplifying the update process and reducing manual effort.

- Components Upgraded: The automatic upgrade updates core components like HiveMQ, Redpanda, and others to the latest tested versions. Automated testing ensures that these components work together and are compatible before release.

- Exceptions: Node-RED and Grafana: These require manual upgrades because they rely on user-installed plugins that might be incompatible with automatic updates. We chose not to automate their upgrades so that you can maintain control over them and ensure your custom plugins and configurations remain intact.

Streamlined Helm Chart

We have simplified the Helm chart by removing unused services and legacy structures, making the system more efficient and easier to manage:

- Removed Services:

  - barcodereader: Removed due to lack of use.

  - sensorconnect: Now integrated into benthos-umh.

  - databridge: Replaced by a Benthos-based bridge.

  - kafkabridge: Replaced by a Benthos-based bridge.

  - mqtt-kafka-bridge: Replaced by a Benthos-based bridge.

  - tulip-connector: Removed due to lack of use.

- Modified Services:

  - kafka-to-postgresql-v2: The _historian handler was removed; this is now handled by a Benthos service.

- Legacy Structures Removed:

  - VerneMQ Service Name: Removed because we have been using HiveMQ since version 0.9.10.

Conclusion

- Benthos-Based Bridges: We have enhanced reliability and user experience through the adoption of Benthos-based Data Flow Components (DFCs), improving compatibility, maintainability, and providing better monitoring tools.

- Focus on Data Contracts: By emphasizing the _historian schema and allowing for custom data contracts, we have de-emphasized the _analytics schema to align with our product vision and simplify the system.

- Automatic Helm Upgrade: We have streamlined the upgrade process via the management console, making it easier to keep your UMH instance up to date with the latest features and improvements.

We are committed to helping you make the most of these updates. If you have any questions or need assistance, please do not hesitate to reach out through our community channels.