“We wanted to make it as easy as possible for anyone to get started with industrial data integration — without giving up scalability or reliability.” – Alexander Krüger, Co-Founder & CEO, United Manufacturing Hub

Deploying industrial data infrastructure has often been a balancing act between complexity and capability. In this webinar, “Getting Started with UMH Core – Core functionalities collapsed in a single container,” the UMH team introduces UMH Core, a streamlined way to run the essential components of the United Manufacturing Hub in a single, process-isolated Docker container.

From tackling the pain points of Kubernetes-heavy deployments to demonstrating how UMH Core enables faster setup, broader compatibility, and robust data governance, this session walks you through the architecture, deployment scenarios, and hands-on configuration of the new platform. Whether you’re building your first Unified Namespace or scaling across multiple sites, UMH Core offers a simpler starting point without compromising enterprise-grade features.

Webinar Agenda

Introductions

- Speakers: Mateusz Warda (Customer Success) and Alexander Krüger (Co-Founder, CEO)

- Quick intro to United Manufacturing Hub, the speakers’ backgrounds, and the purpose of the webinar.

The Challenge: UMH Classic Complexity

- Limitations of Kubernetes-based deployments for first-time users.

- Pain points: multi-step installation, OS requirements, and reduced flexibility in custom environments.

The Solution: UMH Core – Single Container Deployment

- Overview of UMH Core’s simplified Docker-based setup.

- How process isolation with S6 improves stability and scalability.

- Balancing ease of use with enterprise-level deployment capabilities.

Architecture & Deployment Scenarios

- Core components: Benthos-UMH, Kafka, and optional TimescaleDB, Grafana, MQTT.

- Running UMH Core in segmented networks, on industrial firewalls, Raspberry Pis, and even modern PLCs.

Core vs Classic & Roadmap

- How UMH Core complements UMH Classic and planned convergence of features.

- Guidance on when to choose Core or Classic for new projects.

Live Demo: Installing UMH Core

- Using the Management Console to select Core, configure environment variables, and run via Docker.

- Connecting devices and setting up the first data bridge.

Data Modeling & Governance

- Creating standardized data models to enforce structure and constraints.

- Using data contracts to maintain consistency across multiple sites and integrations.

Stream Processing & Versioning

- Mapping data points to contracts and validating them with Kafka schemas.

- Version control for data models to support evolving requirements without breaking existing integrations.

Configuration as Code

- Managing UMH Core setups with config files, Git integration, and CI/CD pipelines.

- Balancing drag-and-drop usability with automation for scale.

Advanced Features

- Data transformations at the bridge level.

- Integration with Prometheus and Grafana for logging, metrics, and alerting.

Q&A & Closing Remarks

- Community questions, deployment tips, and how to get support via Discord.

Chapters

00:00 – Welcome & Introductions

02:15 – What is UMH & the Unified Namespace

04:50 – Challenges with UMH Classic

07:15 – Why UMH Core

09:40 – Deployment Scenarios & Architecture

14:25 – Core vs Classic & Future Roadmap

16:45 – Live Installation Demo

19:05 – Device Connection & Logging

21:25 – Data Modeling & Governance

28:30 – Stream Processing & Data Contracts

32:15 – Versioning & Scaling Data Models

34:40 – Configuration as Code

36:55 – Transformations & Advanced Features

39:20 – Q&A & Closing Remarks

Transcript

00:00 – Welcome & Introductions

Mateusz Warda:

Hello everyone, and welcome to our webinar, “Introduction to UMH Core.” My name is Mateusz, and I’m hosting today together with Alex. We’ll start with a quick introduction to ourselves and UMH for anyone new to our webinar series. Then we’ll look at the challenges we’ve identified while working with customers over the last year, how UMH Core addresses them, and the specific features we’ve added for data modeling.

Alexander Krüger:

Thanks, Mateusz. I’m Alex, co-founder and CEO of United Manufacturing Hub. My background is in mechanical engineering, and I spent two and a half years in system integration before starting UMH five years ago with Jeremy. I’m excited to share what we’ve learned and how that led to creating UMH Core.

Mateusz Warda:

I’m also a mechanical engineer by background, but I spent five years in management consulting doing digital transformation. Now I help our customers with pilots and scaling. Whenever you have questions about success stories, integrations, or use cases, feel free to reach out.

02:15 – What is UMH & the Unified Namespace

Mateusz Warda:

Most of you are familiar with the Unified Namespace (UNS) and UMH’s role in it. Traditionally, industrial integrations result in complex, “spaghetti” architectures. The UNS solves this by providing a single source of truth: integrating once, standardizing, modeling the data, and democratizing access.

UMH includes three key elements:

- Connectivity – supporting IT and OT interfaces.

- Data Modeling – standardization, governance, and specific models.

- Historian & Use Cases – building business value on top of the infrastructure.

We integrate alongside the automation pyramid, adding business value without replacing existing systems, and package everything — connectivity, broker, historian, visualization — into a single installation command.

04:50 – Challenges with UMH Classic

Alexander Krüger:

UMH Classic was designed for Kubernetes (K3s) deployments, which bring benefits such as scalability, high availability, and version control. But there were two main issues:

- Harder to Get Started – You needed specific OS prerequisites, then had to install Kubernetes and UMH into it. This multi-step process made onboarding harder, especially for open-source users.

- Flexibility Limits – If customers had their own Kubernetes distribution (e.g., OpenShift), integration required additional configuration.

We considered the opposite approach: a single Docker container. That’s easier to start, OS-independent, and low-complexity (docker run and done). But the drawback is that if one process fails, it can take down the whole stack.

07:15 – Why UMH Core

Alexander Krüger:

With UMH Core, we combined both worlds. It’s a single, easy-to-deploy Docker container but internally uses S6 process management to isolate services. Kafka, Benthos configurations, and other processes run independently, so if one fails, it doesn’t bring down the rest.

This design removes Kubernetes pod limits, making UMH Core more scalable in some cases. While Classic will continue to be supported, we’re merging both user journeys over time.
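
The isolation idea can be illustrated with a toy model. The sketch below is not S6 and does not use its API; the `Supervisor` class and the service names are hypothetical, chosen only to show that a crash in one supervised process leaves the others running and that only the failed one is restarted.

```python
from dataclasses import dataclass, field


@dataclass
class Service:
    """A supervised process, modeled only by its name and run state."""
    name: str
    running: bool = True
    restarts: int = 0


@dataclass
class Supervisor:
    """Toy stand-in for a process supervisor such as S6 (not its real API)."""
    services: dict = field(default_factory=dict)

    def add(self, name: str) -> None:
        self.services[name] = Service(name)

    def crash(self, name: str) -> None:
        # Simulate one process exiting unexpectedly.
        self.services[name].running = False

    def tick(self) -> list:
        """Restart every stopped service and return the names restarted."""
        restarted = []
        for svc in self.services.values():
            if not svc.running:
                svc.running = True
                svc.restarts += 1
                restarted.append(svc.name)
        return restarted


supervisor = Supervisor()
for name in ("kafka", "benthos-bridge-1", "benthos-bridge-2"):
    supervisor.add(name)

supervisor.crash("benthos-bridge-1")
# The other services are untouched by the crash.
still_running = [s.name for s in supervisor.services.values() if s.running]
restarted = supervisor.tick()
```

After the simulated crash, `still_running` contains the two unaffected services, and `tick()` restarts only the failed bridge.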

09:40 – Deployment Scenarios & Architecture

Mateusz Warda:

UMH Core includes:

- Benthos-UMH – protocol converters, custom flows, stream processing.

- Kafka – the central component for data storage and forwarding.

- Optional Components – TimescaleDB, Grafana, MQTT.

It can run in diverse environments: segmented networks, industrial firewalls, Raspberry Pis, Revolution Pis, and even modern PLCs with container support. This drastically reduces hardware and OS requirements.

14:25 – Core vs Classic & Future Roadmap

Alexander Krüger:

We will continue to fully support UMH Classic. UMH Core extends it, and over time, features will converge. Some Classic components will be replaced with Core equivalents, ensuring easy migration and automation.

Recommendation for new projects:

- If you need plugins like Grafana and TimescaleDB now → start with Classic.

- If you just need to move data or build a UNS without a database → start with Core.

16:45 – Live Installation Demo

Mateusz Warda:

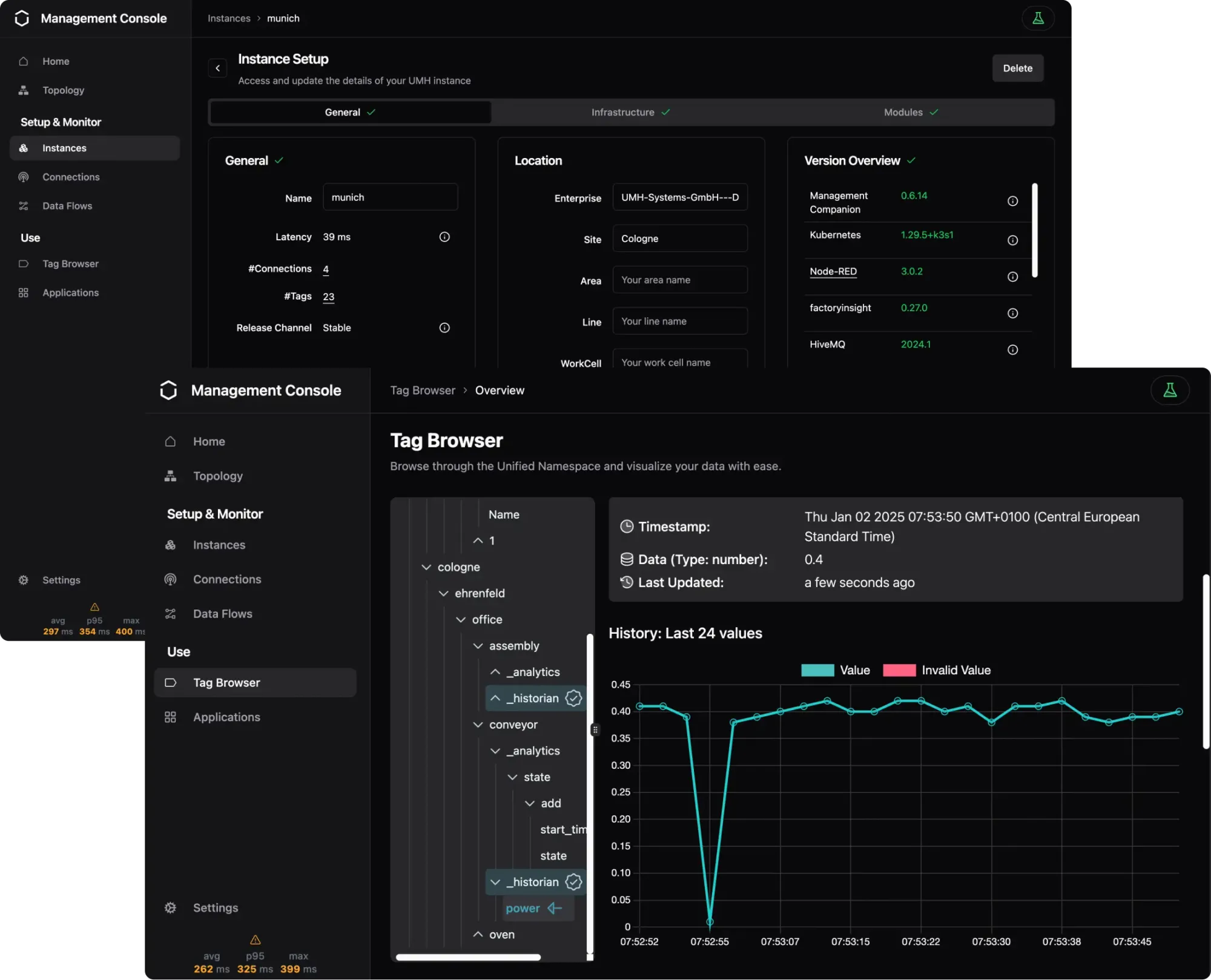

From the Management Console, select Core, choose your release channel, copy the installation command, and run it via Docker. The system will pull the image and launch.

Once running, you can:

- View instance details and logs.

- Connect devices through bridges.

- Configure node IDs and processing rules directly in the UI.

21:25 – Data Modeling & Governance

Alexander Krüger:

Data models are templates defining required data points, types, and constraints. They act as a governance layer to prevent “drift” over time, ensuring consistent payloads for applications like MES or ERP systems.

Benefits include:

- Enforced standards across sites.

- Reliable API integrations.

- Simplified scaling of the UNS.
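
A data model of this kind can be sketched in a few lines of Python. This is an illustration of the concept only, not UMH Core's actual model format: `FieldSpec`, `PUMP_MODEL`, the field names, and the limits are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FieldSpec:
    """One required data point: its type and optional numeric bounds."""
    type_: type
    minimum: Optional[float] = None
    maximum: Optional[float] = None


# Hypothetical model for a pump; field names and limits are illustrative only.
PUMP_MODEL = {
    "temperature_c": FieldSpec(float, minimum=-40.0, maximum=150.0),
    "running": FieldSpec(bool),
    "serial_number": FieldSpec(str),
}


def validate(payload: dict, model: dict) -> list:
    """Return a list of violations; an empty list means the payload conforms."""
    errors = []
    for name, spec in model.items():
        if name not in payload:
            errors.append(f"missing field: {name}")
            continue
        value = payload[name]
        # bool is a subclass of int in Python, so compare exact types.
        if type(value) is not spec.type_:
            errors.append(
                f"{name}: expected {spec.type_.__name__}, got {type(value).__name__}"
            )
            continue
        if spec.minimum is not None and value < spec.minimum:
            errors.append(f"{name}: {value} below minimum {spec.minimum}")
        if spec.maximum is not None and value > spec.maximum:
            errors.append(f"{name}: {value} above maximum {spec.maximum}")
    # Reject unknown fields so payloads cannot drift away from the model.
    for name in payload:
        if name not in model:
            errors.append(f"unexpected field: {name}")
    return errors


good = {"temperature_c": 71.5, "running": True, "serial_number": "P-001"}
drifted = {"temperature_c": "71.5", "running": True, "temp_f": 160.7}

good_errors = validate(good, PUMP_MODEL)
drift_errors = validate(drifted, PUMP_MODEL)
```

The `drifted` payload shows the kind of change the governance layer is meant to catch: a value that silently became a string, a required field that disappeared, and an extra field nobody agreed on.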

28:30 – Stream Processing & Data Contracts

Mateusz Warda:

Stream processors map incoming data to data contracts, which validate it against the model. Kafka’s schema registry ensures tags, keys, and formats match expectations.

A key benefit is version control — older integrations remain functional when models are updated.
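
The mapping and versioning steps can be sketched as follows. A plain dictionary stands in for the schema registry here, and the tag addresses, the `pump` model, and both contract versions are hypothetical; the point is only that version 1 stays available after version 2 is introduced.

```python
# Hypothetical versioned contracts: each version lists required fields and types.
CONTRACTS = {
    ("pump", 1): {"temperature_c": float},
    ("pump", 2): {"temperature_c": float, "pressure_bar": float},
}

# Hypothetical mapping from raw device tags to model field names.
TAG_MAPPING = {"DB1.DW20": "temperature_c", "DB1.DW24": "pressure_bar"}


def map_tags(raw: dict) -> dict:
    """Rename raw tags to model fields, dropping tags with no mapping."""
    return {TAG_MAPPING[tag]: value for tag, value in raw.items() if tag in TAG_MAPPING}


def conforms(payload: dict, model: str, version: int) -> bool:
    """Check that the payload has exactly the fields and types of one contract version."""
    contract = CONTRACTS[(model, version)]
    if set(payload) != set(contract):
        return False
    return all(type(payload[name]) is expected for name, expected in contract.items())


raw_old_site = {"DB1.DW20": 71.5, "DB1.DW99": 3}    # site still publishing v1 data
raw_new_site = {"DB1.DW20": 71.5, "DB1.DW24": 2.4}  # site upgraded to v2

old_payload = map_tags(raw_old_site)
new_payload = map_tags(raw_new_site)

old_ok_v1 = conforms(old_payload, "pump", 1)
new_ok_v2 = conforms(new_payload, "pump", 2)
old_ok_v2 = conforms(old_payload, "pump", 2)
```

Because each payload is validated against an explicit version, the older site keeps passing its version 1 contract while the upgraded site is checked against version 2.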

34:40 – Configuration as Code

Alexander Krüger:

All configurations are stored as files, so you can:

- Manage them in Git.

- Use CI/CD pipelines for deployment.

- Automate repetitive setup using templates.

Power users can bypass the drag-and-drop interface and work directly with config files, enabling large-scale, repeatable deployments.
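
As a rough sketch of the templating idea, the script below generates one bridge definition per machine from a shared template. The configuration keys, site and machine names, and addresses are invented for illustration and do not reflect UMH Core's real config schema.

```python
import json


def render_bridge_config(site: str, machine: str, address: str) -> dict:
    """Build one bridge definition from a shared template (hypothetical keys)."""
    return {
        "name": f"{site}-{machine}-bridge",
        "source": {"protocol": "opcua", "address": address},
        "topic": f"{site}/{machine}",
    }


# Hypothetical inventory; in practice this might live in a file tracked in Git.
MACHINES = [
    ("cologne", "press-01", "opc.tcp://10.0.0.11:4840"),
    ("cologne", "press-02", "opc.tcp://10.0.0.12:4840"),
]

configs = [render_bridge_config(*machine) for machine in MACHINES]

# Stable key order keeps Git diffs small and reviewable.
rendered = json.dumps(configs, indent=2, sort_keys=True)
```

A CI/CD job could run a script like this and commit or deploy the rendered output, so adding a machine becomes a one-line change to the inventory.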

36:55 – Transformations & Advanced Features

Mateusz Warda:

Transformations can be applied at the bridge level (e.g., converting Celsius to Fahrenheit). Integration with Prometheus and Grafana enables advanced logging, metrics, and alerting.
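
The Celsius-to-Fahrenheit example can be written as a small per-message function. The message shape (`value` and `unit` keys) is an assumption for this sketch, not the payload format used by UMH Core.

```python
def celsius_to_fahrenheit(celsius: float) -> float:
    """Convert a temperature from degrees Celsius to degrees Fahrenheit."""
    return celsius * 9.0 / 5.0 + 32.0


def transform(message: dict) -> dict:
    """Return a copy of the message with the value converted and the unit relabeled."""
    if message.get("unit") != "C":
        # Pass through anything not measured in Celsius.
        return dict(message)
    return {**message, "value": celsius_to_fahrenheit(message["value"]), "unit": "F"}


boiling = transform({"value": 100.0, "unit": "C"})
pressure = transform({"value": 2.4, "unit": "bar"})
```

Here `boiling` comes back as 212.0 with unit `F`, while the pressure reading is returned unchanged.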

39:20 – Q&A & Closing Remarks

Alexander Krüger:

We answered questions on:

- Transformation placement (bridge level).

- Using Git for config management.

- CI/CD compatibility.

For support, join our Discord community and reach out directly.