An Architect's Guide to Data Modeling in Manufacturing in UNS-based Architectures

C) Data Modeling in OLTP Databases

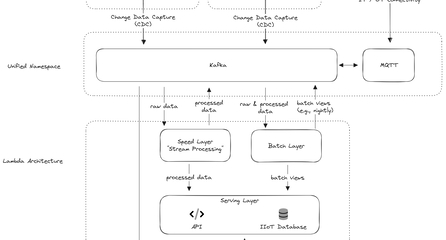

In the realm of manufacturing data management, a key focus is not just on the real-time use of data but also on its storage for later analysis. This is particularly relevant in our discussions on OLTP and OLAP systems, which meet the distinct data needs of frontline workers and business analysts. More details can be found in our UMH blog article.

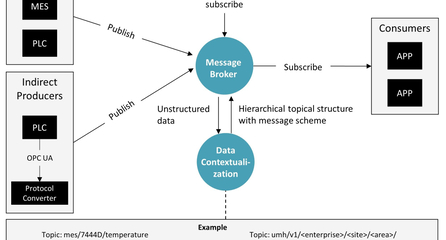

Our goal is to store specific data points from the Unified Namespace's conceptual framework, such as umh/v1/enterprise/site/area/productionLine/workCell/originID/_schema. There are two key schemas involved in storing this data: _historian and _analytics.

We selected TimescaleDB's hypertable functionality for storing time-series data, valuing its reliability, scalability, and smooth integration into our IT/OT framework. Beyond time-series data, we also manage relational data, and TimescaleDB efficiently supports both, leveraging its underlying PostgreSQL capabilities. This decision, as detailed in our ADRs, represents a harmonious blend of technical sophistication and operational practicality.

Context: Management of large-scale time-series data within UMH.

Challenge: Identifying a database solution that provides reliability, scalability, maintainability, and integrates seamlessly with existing IT/OT practices.

Decision: TimescaleDB is chosen for its hypertable functionality, which offers automated partitioning and a suite of performance optimization features. Historians are acknowledged for their role but are not chosen as the sole solution.

Neglected Alternatives:

- Manual Partitioning (pg_partman): Although pg_partman aids in managing partitions within PostgreSQL, it requires additional manual setup and ongoing maintenance, which can introduce complexity and the risk of human error. Moreover, it lacks the advanced automation and query optimization features provided by TimescaleDB hypertables. More info.

- Historians: Traditional historians like OSIsoft PI or Canary Labs offer interfaces familiar to OT engineers but tend to lack integration with modern IT practices. Their deployment and operational methods can pose challenges for IT teams, such as the absence of standard monitoring and logging features and the necessity for manual on-premises setup. More info.

- InfluxDB: This time-series database does not offer the same level of maturity as TimescaleDB (InfluxDB has now been rewritten 3 times from scratch) and requires users to learn Flux, a new query language, instead of the more widely known SQL. It also provides scalability features primarily in its paid version, potentially leading to vendor lock-in concerns. More info

Objective: To employ a database that ensures system integrity, ease of data management, and robustness in handling large datasets.

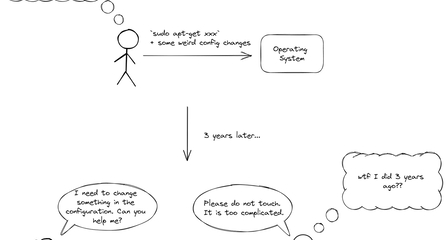

Trade-off: Overlooking the simplicity of manual partitioning and the OT familiarity of Historians for a more comprehensive, IT-compatible solution.

Mitigation Strategy: Provide extensive documentation and support to bridge the familiarity gap for OT professionals and ensure smooth integration of TimescaleDB within UMH's data ecosystem.

Rationale:

- The mature, stable nature of TimescaleDB, and its SQL compatibility, offers a reliable and scalable solution.

- Hypertables provide a simplified and automated approach to partitioning compared to manual methods.

- The adoption of TimescaleDB provides a maintainable solution that adheres to IT best practices and meets the operational requirements of OT engineers.

Table 1: asset

In both _historian and _analytics schemas, we reference an asset defined in the ISA95 model, like an enterprise or a workcell within a production line. This leads us to the creation of our first table:

CREATE TABLE asset (

id SERIAL PRIMARY KEY,

enterprise TEXT NOT NULL,

site TEXT DEFAULT '' NOT NULL,

area TEXT DEFAULT '' NOT NULL,

line TEXT DEFAULT '' NOT NULL,

workcell TEXT DEFAULT '' NOT NULL,

origin_id TEXT DEFAULT '' NOT NULL,

UNIQUE (enterprise),

UNIQUE (enterprise, site),

UNIQUE (enterprise, site, area),

UNIQUE (enterprise, site, area, line),

UNIQUE (enterprise, site, area, line, workcell),

UNIQUE (enterprise, site, area, line, workcell, origin_id)

);In constructing the asset table, we prioritize easy data retrieval. Our approach, detailed in the ADRs, includes using a denormalized SQL table for simplicity and applying unique constraints for data integrity.

Context: Efficiently accessing stored data in TimescaleDB in the United Manufacturing Hub (UMH) using SQL.

Challenge: Balancing the need for straightforward queries with the potential for data redundancy and update complexities.

Decision: Adopt a single, denormalized table structure to store asset information, enabling straightforward queries without joins.

Neglected Alternatives:

- Normalized Database Schema: Separating asset attributes into distinct tables. This approach could reduce redundancy but would require complex joins for queries. For example, asset details might be spread across multiple tables (e.g., enterprises, sites, areas), necessitating multi-table joins to retrieve comprehensive asset information.

Objective: Simplify and streamline asset data queries while maintaining system efficiency and maintainability.

Trade-off: The denormalized approach accepts some data redundancy and requires careful update management to ensure data consistency.

Mitigation Strategy: Provide clear documentation and user guidance for query construction and updates.

Rationale:

- The manageable size of the table (10k rows maximum) mitigates concerns around redundancy.

- The ease of constructing and reading queries takes precedence given the scope and scale of data.

- Simplified querying methods via get_asset_id function or direct JOIN operations offer ease of use:

- Function-based retrieval avoids complex joins.

- Direct joins offer an alternative method that leverages SQL's relational capabilities.

Context: Managing unique identification of assets in the United Manufacturing Hub (UMH).

Challenge: Enforcing uniqueness in asset identification while accommodating optional fields within the asset hierarchy.

Decision: Implement unique indexes with COALESCE functions to ensure uniqueness across various combinations of asset identifiers.

Neglected Alternatives: Waiting for TimescaleDB's compatibility with PostgreSQL 15, which could potentially offer more straightforward mechanisms for enforcing uniqueness.

Objective: To maintain strict uniqueness in asset identification without relying on PostgreSQL 15 features not yet supported by TimescaleDB.

Trade-off: Introducing complex unique index expressions instead of potentially cleaner solutions available in PostgreSQL 15.

Mitigation Strategy: Utilize COALESCE within unique indexes to handle NULL values effectively, ensuring that asset uniqueness is preserved even when some attributes are unspecified.

Rationale:

- The chosen approach addresses the need for a reliable method to enforce uniqueness in the absence of PostgreSQL 15 features.

- This ensures data integrity and prevents duplicate records for assets, crucial for the accuracy of asset tracking and management.

Table 2: tag

The _historian schema is structured to store time-series data effectively in TimescaleDB. When payloads have Keys with a Number as value, the key for that asset will be the name, and the value will be the value. The originid will include information about the origin of each tag (e.g., the origin PLC), though it is not implemented yet.

CREATE TABLE tag (

timestamp TIMESTAMPTZ NOT NULL,

name TEXT NOT NULL,

origin TEXT NOT NULL,

asset_id INT REFERENCES asset(id) NOT NULL,

value REAL,

UNIQUE (name, asset_id, timestamp)

);

SELECT create_hypertable('tag', 'timestamp');

CREATE INDEX ON tag (asset_id, timestamp DESC);

CREATE INDEX ON tag (name);Context: Effective storage and querying of time-series data for assets within the United Manufacturing Hub (UMH).

Challenge: Ensuring data integrity, query performance, and the ability to accurately track time-series data per asset.

Decision: Structuring the tag table with a focus on time-series data, utilizing TimescaleDB's hypertable functionality, and indexing to optimize query performance.

Objective: To create a database schema that enables efficient time-series data storage and retrieval, while maintaining data integrity and simplifying asset tracking.

Trade-off: Potentially increased complexity in table structure and index management.

Technical Details:

- The tag table is structured with timestamp, name, origin, and asset_id to align with time-series data requirements.

- The table is converted into a hypertable to leverage TimescaleDB's time-series optimization. Indexes on asset and timestamp, as well as on name, improve query performance for fetching all values for a certain asset as well as listing all potential tagnames for a certain asset.

Rationale:

- TimescaleDB's hypertable supports efficient time-series data management with automatic partitioning based on time intervals.

- Indexing strategies are employed to enhance the speed and efficiency of common queries, such as retrieving the latest data points for an asset or listing all tag names.

- The unique constraint on name, asset, and timestamp prevents data duplication, ensuring each asset's data points are distinct and accurate over time.

- Users have two simple options to query data

- SELECT name, value, timestamp

FROM tag

WHERE asset = get_asset_id(

'enterprise1',

'site1',

'area1',

'line1',

'workcell1',

'originid1'

); - SELECT tag.name, tag.value, tag.timestamp

FROM tag

JOIN asset ON tag.asset = asset.id

WHERE asset.enterprise = 'enterprise1'

AND asset.site = 'site1'

AND asset.area = 'area1'

AND asset.line = 'line1'

AND asset.workcell = 'workcell1'

AND asset.origin_id = 'originid1';

- SELECT name, value, timestamp